My Internship Journey at CyberForce: Building an AI Security Strategy

This summer, I had the privilege of joining CyberForce in Istanbul as an AI Security Strategist intern.

It was an eight-week journey (July–September 2025) that combined technical deep dives, professional mentorship, and many personal lessons. At the same time, I was preparing to move from Turkey to Germany for the second year of my Erasmus Mundus CYBERMACS master’s program.

What started as an intimidating challenge (“design a security guidebook for AI systems”) became one of the most rewarding experiences I’ve had on my academic and professional path.

In this post, I want to share that journey: the technical details, the real-life lessons, and the fun little moments that made it uniquely mine.

Why AI Security? Why Now?

AI systems are no longer something of the distant future. They power everything from healthcare diagnostics and financial trading to autonomous vehicles and even national security systems.

But like every technology, AI comes with risks:

- Prompt injection attacks that trick large language models into behaving maliciously.

- Data poisoning that corrupts training data.

- Model extraction, where attackers steal or replicate proprietary AI models.

Traditional cybersecurity frameworks often fail to address these scenarios. Therefore, AI security is becoming its own specialised domain, bridging machine learning risks with established cybersecurity practices.

That’s where my role came in.

My Role as AI Security Strategist

At CyberForce, my mission was clear (and slightly terrifying at first 😅):

“Create our company’s official AI Security Guidebook — a methodology and checklist that defines the steps to take and the risks to look for when testing AI systems.”

This meant I had to:

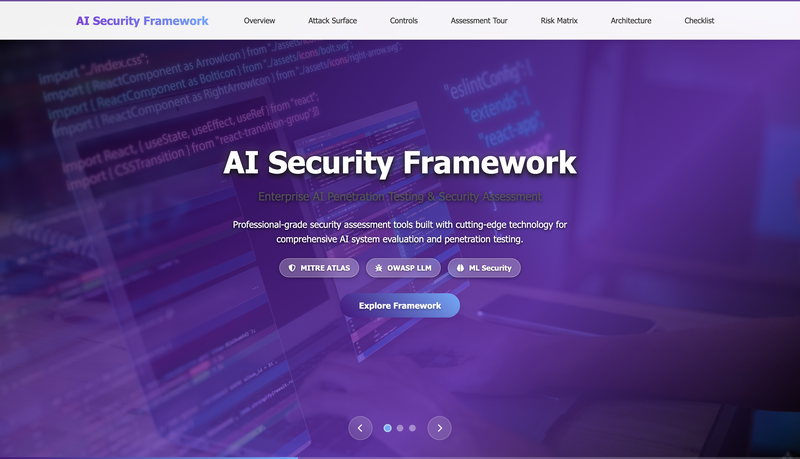

- Research global best practices (such as MITRE ATLAS and the OWASP LLM Top 10).

- Design a framework and risk matrix tailored for AI systems.

- Build an interactive web prototype to showcase how it all worked.

- Integrate case studies from teammates working on offensive AI testing and AI supply chain security.

Basically, I was part strategist, part architect, and part hands-on builder. (I know, right? It was as cool as it sounds 😁)

Breaking Down the Framework

The framework I designed had three pillars:

- Methodology & Checklist

- A structured process for auditing AI systems.

- Security controls mapped to OWASP LLM Top 10 risks like prompt injection, insecure output handling, and sensitive data disclosure.

- A structured process for auditing AI systems.

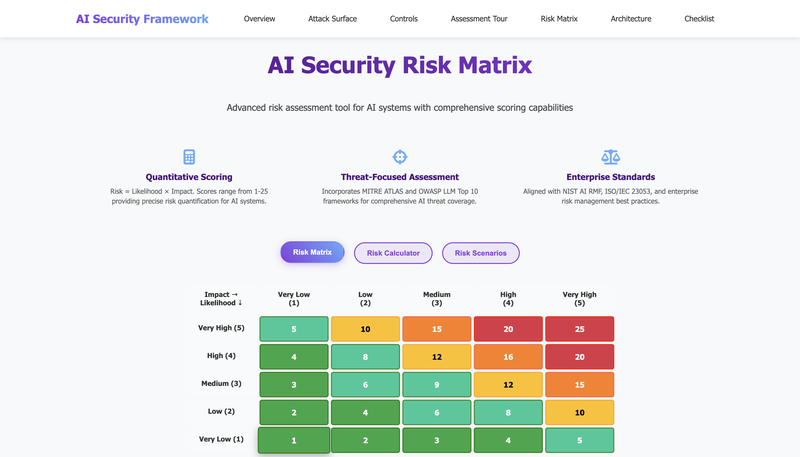

- Risk Matrix

- A dynamic scoring system combining:

- Threat type (adversarial attack, poisoning, extraction, bias exploitation, privacy leakage).

- Asset criticality.

- Attack likelihood.

- Business impact.

- Threat type (adversarial attack, poisoning, extraction, bias exploitation, privacy leakage).

- Output: a clear risk alert level to guide prioritisation.

- A dynamic scoring system combining:

- Interactive Prototype

- A web-based tool (HTML5, CSS3, JavaScript, Node.js backend).

- Simulated an AI security audit workflow with checklists, scoring, and export features.

- A web-based tool (HTML5, CSS3, JavaScript, Node.js backend).
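To make the Risk Matrix pillar more concrete, here is a minimal JavaScript sketch of how such a score could be computed. It is not the prototype's actual code: the threat-type weights, the 1 to 5 rating scales, the multiplicative formula, and the alert thresholds are all assumptions chosen for illustration.

```javascript
// Hypothetical weights per threat type (illustrative assumptions only).
const THREAT_WEIGHTS = {
  adversarial: 1.0,
  poisoning: 1.2,
  extraction: 1.1,
  bias: 0.8,
  privacy: 1.2,
};

// Each factor is assumed to be rated by the assessor on a 1-5 scale.
function checkScale(name, value) {
  if (!Number.isInteger(value) || value < 1 || value > 5) {
    throw new RangeError(`${name} must be an integer from 1 to 5, got ${value}`);
  }
}

// Combine threat type, asset criticality, likelihood and business impact
// into a 0-100 score, then map that score to an alert level.
function scoreRisk({ threatType, criticality, likelihood, impact }) {
  const weight = THREAT_WEIGHTS[threatType];
  if (weight === undefined) {
    throw new Error(`Unknown threat type: ${threatType}`);
  }
  checkScale("criticality", criticality);
  checkScale("likelihood", likelihood);
  checkScale("impact", impact);

  // The raw product of the three ratings ranges from 1 to 125,
  // then it is scaled by the threat-type weight.
  const raw = criticality * likelihood * impact * weight;
  // Normalise against the highest possible raw score.
  const maxWeight = Math.max(...Object.values(THREAT_WEIGHTS));
  const score = Math.round((raw / (125 * maxWeight)) * 100);

  // Hypothetical thresholds for the alert levels.
  let level;
  if (score >= 60) level = "CRITICAL";
  else if (score >= 35) level = "HIGH";
  else if (score >= 15) level = "MEDIUM";
  else level = "LOW";

  return { score, level };
}

console.log(scoreRisk({ threatType: "extraction", criticality: 5, likelihood: 3, impact: 4 }));
// { score: 44, level: 'HIGH' }
```

A multiplicative formula means a low rating on any one factor pulls the whole score down, which matches the intuition that an unlikely attack on a low-value asset shouldn't top the priority list; an additive score would be a reasonable alternative if that behaviour feels too aggressive.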

The Work Behind the Scenes

Research & Foundations

My first two weeks were all about immersion: digging through MITRE ATLAS, the OWASP LLM Top 10, the NIST AI RMF, Google SAIF, the CSA AI Controls Matrix, and industry whitepapers from organisations like KPMG and Microsoft.

Honestly, what I quickly realised is that AI security is not just cybersecurity with a twist.

It is a world of its own, requiring us to rethink assumptions about input/output validation, dependency trust, and adversarial behaviour.
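As one small illustration of rethinking output validation: if a web page renders text returned by a language model, that text should be treated like any other untrusted input (this is the "insecure output handling" risk in the OWASP LLM Top 10). The sketch below escapes HTML-significant characters before rendering. `renderModelReply` is a hypothetical helper, and a real application would more likely rely on its framework's built-in escaping or a vetted sanitiser.

```javascript
// Escape characters that are significant in HTML so model output
// is displayed as text instead of being interpreted as markup.
function escapeHtml(text) {
  return String(text)
    .replace(/&/g, "&amp;") // must run first so later entities are not double-escaped
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;")
    .replace(/'/g, "&#39;");
}

// Hypothetical helper: wrap an LLM reply for insertion into a page.
// The reply is treated as untrusted input, exactly like user input.
function renderModelReply(reply) {
  return `<div class="llm-reply">${escapeHtml(reply)}</div>`;
}

const reply = 'Sure! <img src=x onerror="alert(1)">';
console.log(renderModelReply(reply));
// <div class="llm-reply">Sure! &lt;img src=x onerror=&quot;alert(1)&quot;&gt;</div>
```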

Building & Debugging

By week three, I was deep in full-stack development mode.

Imagine writing over 6,000 lines of JavaScript, tweaking CSS, and trying to get the export functionality to stop breaking… (spoiler: it broke more than once).

Copilot sometimes helped — and sometimes broke everything. At one point, I had to start over from backups.

Painful, but also a reminder of the importance of version control.

I learned to:

- Use Git branching strategies for safer experimentation.

- Containerise deployments with Docker.

- Automate builds with CI/CD pipelines and deploy via Netlify.

Feedback & Iteration

One highlight was our midpoint presentation to our supervisors. They gave us sharp feedback; for me specifically, it was:

- “Be more specific to AI security, not just general cybersecurity.”

- “Define the ‘why’: for example, why you chose certain security controls (e.g., LLM01 over others).”

- “Consider concurrency in checklists.” (Here I thought of a learning-path flow, where completing one step unlocks the next.)

I left with a clear to-do list: streamline processes, add benchmarks, and make the framework production-ready.
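The "one step unlocks the next" idea from that feedback can be modelled as a checklist in which each item lists its prerequisites and only becomes available once they are complete; items whose prerequisites are all met can then be worked on in parallel. This is a minimal sketch, and the item IDs, titles, and prerequisite links are illustrative assumptions rather than the contents of the actual guidebook.

```javascript
// Hypothetical checklist items; `requires` lists prerequisite item IDs.
const checklist = [
  { id: "scope", title: "Define AI system scope and assets", requires: [] },
  { id: "threats", title: "Map threats with MITRE ATLAS", requires: ["scope"] },
  { id: "llm01", title: "Test for prompt injection (OWASP LLM01)", requires: ["threats"] },
  { id: "output", title: "Review output handling", requires: ["threats"] },
  { id: "report", title: "Score risks and write report", requires: ["llm01", "output"] },
];

// An item is unlocked when it is not done yet and all prerequisites are done.
function unlockedItems(items, completed) {
  return items
    .filter((item) => !completed.has(item.id))
    .filter((item) => item.requires.every((dep) => completed.has(dep)))
    .map((item) => item.id);
}

// Mark an item complete, refusing if any prerequisite is still open.
function complete(items, completed, id) {
  const item = items.find((i) => i.id === id);
  if (!item) throw new Error(`Unknown checklist item: ${id}`);
  const missing = item.requires.filter((dep) => !completed.has(dep));
  if (missing.length > 0) {
    throw new Error(`Cannot complete "${id}" before: ${missing.join(", ")}`);
  }
  completed.add(id);
}

const done = new Set();
complete(checklist, done, "scope");
complete(checklist, done, "threats");
console.log(unlockedItems(checklist, done)); // [ 'llm01', 'output' ]
```

Here `llm01` and `output` unlock together once threat mapping is done, while `report` stays locked until both are finished.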

The Fun, Human Side

It wasn’t all code and risk matrices. Some of my favourite memories came from small, human moments:

- The key card moment: My supervisor trusted me with his office card after a late meeting. (Yes, it felt cool.)

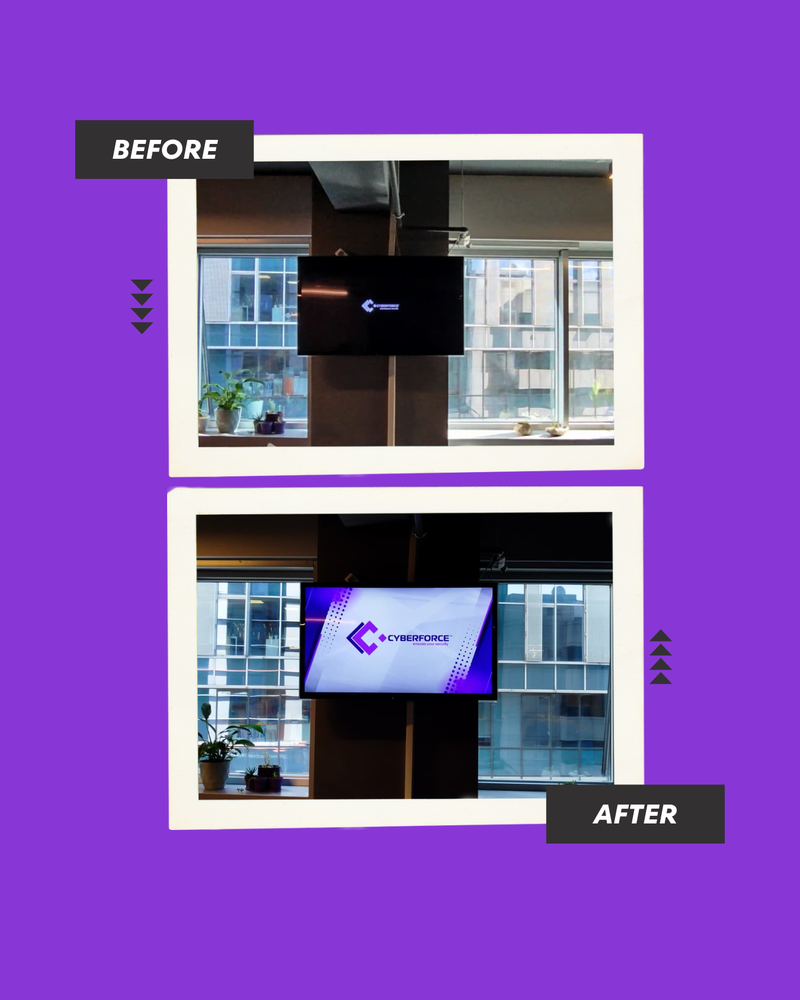

- Logo animation: I designed an animated welcome graphic for our framework, and they actually agreed to use it! Tiny win, huge morale boost.

Before/after graphic of welcome screen design with logo animation

- Conversations at lunch: From music debates (Radiohead vs Pink Floyd) to cybersecurity in movies (“Hollywood hacking”), these chats made me feel part of the team.

- Inside joke: I left my computer unlocked, only to find a cheeky message typed in by a colleague: “Be careful when you work in a cybersec office.” Lesson learned. 😂

Certifications & Learning Resources

Beyond my day-to-day project work, I invested time in some certifications and structured learning to deepen my expertise.

Two milestones stand out:

- ISO/IEC 27001:2022 Lead Auditor – Issued by Mastermind Assurance. This certification equipped me with a strong understanding of information security management systems (ISMS), governance principles, and auditing practices. It was particularly useful when thinking about compliance and governance aspects of AI security, since frameworks must not only be technically sound but also auditable and aligned with standards.

- AI Security & Governance Certification – Issued by Securiti Education. This program covered responsible AI adoption, governance models, and practical controls for securing AI systems. It complemented my technical framework work by grounding it in governance and accountability, two pillars of AI security that are often overlooked.

Resources That Shaped My Work

A lot of my framework design came from digging into community and industry resources. They helped me with both the technical and governance aspects of my project.

Here are some that proved invaluable:

Core Frameworks & Standards

- OWASP LLM Top 10 → https://genai.owasp.org/llm-top-10/

- OWASP AI Security & Privacy Guide → https://owasp.org/www-project-ai-security-and-privacy-guide/#how-to-address-ai-security

- OWASP AI Red Teaming Initiatives → https://genai.owasp.org/initiatives/#ai-redteaming

- MITRE ATLAS (Matrix) → https://atlas.mitre.org/matrices/ATLAS

- MITRE ATLAS (Case Studies) → https://atlas.mitre.org/studies

- NIST AI Risk Management Framework (AI RMF) → https://www.nist.gov/itl/ai-risk-management-framework

- ISO/IEC 27090 Draft → https://www.iso.org/obp/ui/en/#iso:std:iso-iec:27090:dis:ed-1:v1:en

- Cloud Security Alliance AI Controls Matrix → https://cloudsecurityalliance.org/artifacts/ai-controls-matrix

- Google Secure AI Framework (SAIF) → https://saif.google/secure-ai-framework

Courses & Trainings

- Microsoft Learn – AI Security Fundamentals Path → https://learn.microsoft.com/en-us/training/paths/ai-security-fundamentals/

- Microsoft Learn – AI Security Controls Module → https://learn.microsoft.com/en-us/training/modules/ai-security-controls/2-review-ai-open-source-libraries

- YouTube – AI Security Fundamentals Intro → https://youtu.be/iWnYSdj9rxE

- AI Red Teaming 101 (YouTube series shared by mentor) → https://m.youtube.com/watch?v=DwFVhFdD2fs

- TryHackMe – AI/ML Security Threats Room → https://tryhackme.com/room/aimlsecuritythreats

Industry Insights

- Practical DevSecOps: Best AI Security Frameworks → https://www.practical-devsecops.com/best-ai-security-frameworks-for-enterprises/

- KPMG Cybersecurity Strategy & Governance → https://kpmg.com/us/en/capabilities-services/advisory-services/cyber-security-services/cyber-strategy-governance/security-framework.html

- KPMG Infographic (Security Framework PDF) → https://kpmg.com/kpmg-us/content/dam/kpmg/pdf/2022/security-framework.pdf

- IBM AI Security Overview → https://www.ibm.com/think/topics/ai-security

- IBM – Large Language Models Insights → https://www.ibm.com/think/topics/large-language-models

And many more articles, white papers, and other resources. AI assistants like ChatGPT and Claude also became part of my research process: I used them to brainstorm improvements, reframe checklist logic, and sanity-check my methodology. Of course, they weren’t a substitute for industry frameworks like MITRE ATLAS or OWASP, but they were invaluable sparring partners, pushing me to think through “what if” scenarios and alternative approaches. I also often leaned on GitHub Copilot to speed up repetitive coding tasks or to suggest fixes when debugging issues with the export functionality.

Overall, these resources became my toolbox, guiding both the practical and governance layers of the AI Security Guidebook I developed.

Technical Skills Gained

- Framework Design & Risk Modeling – Created a structured AI security methodology with a dynamic risk matrix.

- Web Development – Built an interactive prototype using JavaScript, HTML5, CSS3, Node.js, and Express.

- AI Security Testing – Applied MITRE ATLAS and the OWASP LLM Top 10, and explored adversarial attacks such as prompt injection and model extraction.

- DevOps Practices – Git branching, Docker containerisation, CI/CD pipeline setup, and Netlify deployment.

- Debugging & Code Quality – Fixed export bugs, added error handling, and applied defensive programming practices.
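Since export was the part that kept breaking, here is a hedged sketch of what a more defensive export step might look like: it validates the audit results before serialising them to JSON or CSV, and it quotes CSV fields so that commas, quotes, or line breaks inside findings don't corrupt the file. The function names and the result shape are hypothetical; the prototype's real export code isn't shown in this post.

```javascript
// Quote a CSV field when it contains a comma, quote, or line break,
// doubling any embedded quotes (the usual CSV convention, RFC 4180).
function csvField(value) {
  const s = value === null || value === undefined ? "" : String(value);
  return /[",\r\n]/.test(s) ? `"${s.replace(/"/g, '""')}"` : s;
}

// Hypothetical export: `results` is an array of
// { id, title, status, riskLevel } objects.
function exportResults(results, format) {
  // Defensive checks: fail with a clear message instead of a broken file.
  if (!Array.isArray(results)) {
    throw new TypeError("results must be an array");
  }
  for (const [i, row] of results.entries()) {
    if (row === null || typeof row !== "object") {
      throw new TypeError(`row ${i} is not an object`);
    }
  }

  if (format === "json") {
    return JSON.stringify(results, null, 2);
  }
  if (format === "csv") {
    const columns = ["id", "title", "status", "riskLevel"];
    const lines = [columns.join(",")];
    for (const row of results) {
      lines.push(columns.map((col) => csvField(row[col])).join(","));
    }
    return lines.join("\r\n"); // CRLF line endings, as in RFC 4180
  }
  throw new Error(`Unsupported export format: ${format}`);
}

const csv = exportResults(
  [{ id: "llm01", title: 'Prompt injection, "jailbreak" test', status: "done", riskLevel: "HIGH" }],
  "csv"
);
console.log(csv);
```

The title in the usage example contains both a comma and quotes, so it comes out as `"Prompt injection, ""jailbreak"" test"`, which spreadsheet tools read back as a single field.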

Soft Skills & Training

- Collaboration in a Hybrid Workplace – Balancing in-office teamwork with “remote days” (yes, quotes intentional 😅, iykyk).

- Presentation & Reporting – Delivered progress presentations, integrated feedback, and wrote a formal guidebook.

- Self-Learning Discipline – Completed external training such as the ISO/IEC 27001 Lead Auditor course and Securiti’s AI Security & Governance certification, and pushed myself to finish the weekly tasks and the overall project with few or no reminders.

- Problem-Solving & Adaptability – Learned to pivot when code broke (often) or when my motivation dipped while waiting on visa updates.

- Mentorship & Networking – Engaged in meaningful mentorship, from SIGINT practice to discussions about AI red teaming and telco security.

Contributions to CyberForce

While my framework may remain a proposal rather than an adopted product, my work added value by:

- Prototyping CyberForce’s first AI-specific security assessment methodology.

- Proposing a dynamic, AI-focused risk matrix for threat evaluation.

- Developing a working interactive framework as proof-of-concept.

- Integrating teammate outputs as case studies to create a holistic AI security strategy.

In short: I helped kickstart CyberForce’s conversation around AI security in a structured, tangible way. (At least, that’s how I like to think of it, haha 😅)

Lessons Learned & Reflections

If there’s one thing this internship taught me, it’s that AI security is messy, fascinating, and very human. It requires technical precision and creative problem-solving. I learned to:

- Adapt existing security concepts for new AI risks.

- Balance perfectionism with progress (sometimes “done” is better than “perfect”).

- Value mentorship and team culture as much as technical output.

A personal note?

Some days I was tired, bored, even frustrated. Other days, I felt unstoppable. That’s the reality of real-world projects, and I guess that’s what makes them valuable.

What’s Next?

As I was wrapping up the internship, I was preparing to move to Germany for the second year of my Erasmus Mundus CYBERMACS program, starting October 1st.

I’ll continue exploring AI security, and I’m especially interested in how it intersects with space cybersecurity and governance for my thesis. (maybe maybe 😁)

Oh, and the project isn’t ending here — you can still explore it:

In case you want to check it out, my internship report

Closing Thoughts

As I wrap up this chapter, I want to pause and express my gratitude. First, a heartfelt thanks to Ender Gezer, CTO of CyberForce, for giving me this opportunity and trusting me to contribute to such a forward-looking project. His guidance as both a supervisor and mentor shaped my approach to AI security in ways I’ll carry forward for years.

I’m equally grateful to Tunahan Tekeoğlu, whose mentorship, patience, and feedback helped me refine my work, think critically, and keep pushing toward a production-ready framework.

A special thanks also to my fellow CYBERMACS colleagues (Adolfo and Danium, hearts to you 😊) who interned alongside me at CyberForce: you made the journey more collaborative, more fun, and a lot more memorable. And of course, to the wider CyberForce team and all the great people I met along the way: thank you for welcoming me, sharing your knowledge, and reminding me that cybersecurity is not just about technology, but also about people.

This internship was more than just a project. It was an experience of growth, teamwork, and discovery, and definitely one I’ll always look back on with appreciation. ❤️

I believe internships are never just about the work but equally about growth, people, and moments you’ll remember.

For me, CyberForce was all of that: technical challenge, mentorship, community, and a push out of my comfort zone.

To anyone curious about AI security, or wondering if they should take the leap into a specialised, evolving field: do it.

You’ll learn more than you expect (I sure did ;-)) about systems, about security, and about yourself.

Adolfo, Danium and Regine at Cyberforce